- Frontiers of Machine Learning

- Multimodal Large Language Model and Generative AI

- Smart Earth Observation and Remote Sensing Analysis: From Perception to Interpretation

- 3D Imaging and Display

- Forum on Multimodal Sensing for Spatial Intelligence

- Brain-Computer Interface: Frontiers of Imaging, Graphics and Interaction

- Foundation Models for Embodied Intelligence

- Workshop on Machine Intelligence Frontiers: Advances in Multimodal Perception and Representation Learning

- Human-centered Visual Generation and Understanding

- Spatial Intelligence and World Model for the Autonomous Driving and Robotics

- Seminar on the Growth of Women Scientists

- Video and Image Security in the Era of Large Models Forum

- ICIG 2025 Competition Forum

- Visual Intelligence Session

The forum on “Spatial Intelligence and World Model for the Autonomous Driving and Robotics” aims to explore innovative breakthroughs and industrial applications of cutting-edge technologies such as spatial intelligence and world models in autonomous driving and robotics. In recent years, rapid advances in AI have fueled surging demand in both fields. Spatial intelligence enhances AI’s ability to perceive and interact with 3D environments, while world models allow AI systems to predict and reason autonomously by simulating how real-world environments evolve. Integrating the two promises to give embodied intelligent systems, such as autonomous vehicles and robots, the capacity for autonomous decision-making and adaptation.

The core objectives of this forum are: (1) to explore theoretical breakthroughs in world models and spatial intelligence and to develop more efficient 4D spatio-temporal modeling methods; (2) to discuss the latest applications in autonomous driving and robotics, including digital twins, scene generation, and agent optimization; and (3) to promote technical standardization and industrialization and to strengthen collaboration among industry, academia, and research institutions. By bringing together leading experts from around the world, the forum aims to accelerate the transition of AI from perception to cognition, lower the cost of deploying autonomous driving technology, make robots more adaptable in open environments, drive technological innovation and industrial upgrading, and move spatial intelligence and world models toward practical applications.

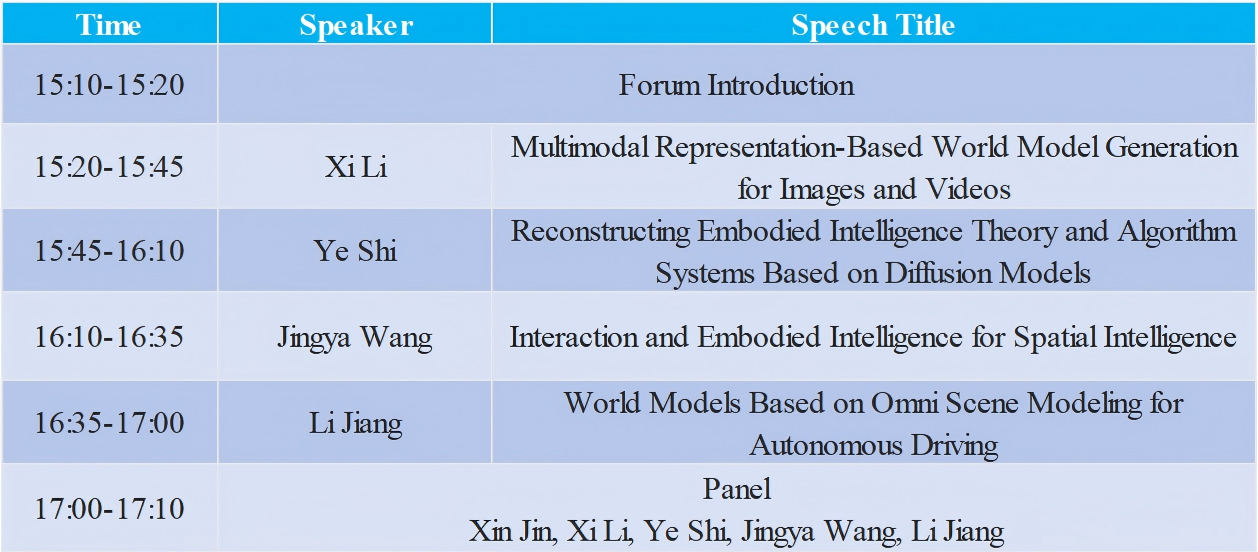

Schedule

Nov. 2nd 15:10-17:10

Organizer

Jingyi Yu

ShanghaiTech University, Professor

Biography:

Professor Jingyi Yu is an OSA Fellow, IEEE Fellow, and ACM Distinguished Scientist, and Director of the Ministry of Education Key Laboratory of Intelligent Perception and Human-Computer Collaboration. He received a dual bachelor’s degree from the California Institute of Technology (Caltech) in 2000 and a Ph.D. from the Massachusetts Institute of Technology (MIT) in 2005. He is currently Deputy Provost, Professor, and Dean of the School of Information Science and Technology at ShanghaiTech University. Professor Yu’s research spans computer vision, computational imaging, computer graphics, and bioinformatics, and he has received the NSF CAREER Award from the U.S. National Science Foundation. In intelligent light field research, he holds more than ten international PCT patents, which have been widely applied in smart cities, digital humans, and human-computer interaction. He also serves on the editorial boards of top-tier journals such as IEEE TPAMI and IEEE TIP, and as program chair for multiple international artificial intelligence conferences (ICCP 2016, ICPR 2020, WACV 2021, CVPR 2021, ICCV 2025). He is a member of the World Economic Forum (WEF) “Global Agenda Council” and serves as its Curator for the Metaverse area.

Xin Jin

Eastern Institute of Technology, Ningbo, Assistant Professor

Biography:

Xin Jin is an assistant professor and doctoral supervisor at Eastern Institute of Technology, Ningbo, and a member of the Zhejiang Province Young Elite Talent Program. His research covers the cutting-edge fields of intelligent media and computer vision. He has published over 40 high-quality papers, which have been cited over 5,000 times on Google Scholar. Many of his research findings have been applied in products from companies such as Microsoft, Alibaba, and Geely Automobile, generating significant economic value. He has received the President's Special Award from the Chinese Academy of Sciences and the IEEE Circuits and Systems Society's Second Visual Signal Processing and Communications Rising Star Award, and has been named to Stanford University's list of the World's Top 2% Scientists. He serves as a committee member of the IEEE VSPC, the Multimedia Special Interest Group of the CSIG, the Embodied Intelligence Special Interest Group of the CAAI, and the Executive Committee of VALSE. He has organized tutorials and workshops on spatial representation learning, embodied intelligence, and generative technologies at top conferences such as CVPR, ICCV, NeurIPS, ACMMM, and ECCV.

Presenters

Xi Li

Zhejiang University, Professor

Biography:

Xi Li is a Qiushi Distinguished Professor at Zhejiang University and a recipient of the National Science Fund for Distinguished Young Scholars. He is a Member of NAAI, a Fellow of IAPR, IET, and AAIA, an IEEE Senior Member, and a Distinguished Member of CCF and CSIG. He has been selected for the National Young Talents Program, appointed a Zhejiang Provincial Distinguished Professor, and awarded the Zhejiang Provincial Fund for Distinguished Young Scholars. His research focuses on artificial intelligence and computer vision, and he has over 200 high-quality academic publications to his credit. He serves on the editorial boards of international journals (e.g., TMM) and as Area Chair for international conferences (e.g., ICCV).

He has led numerous significant research projects, including the Ministry of Science and Technology's 2030 "New Generation AI" Major Project, the NSFC Joint Fund Key Project, the NSFC Mathematical Tianyuan Fund Cross-Disciplinary Key Project, the Ministry of Education Key Planning Research Project, the Military Science Commission Major Special Project, the Zhejiang Provincial Fund Major Project, the Zhejiang Provincial Distinguished Young Scholars Project, and the Ningbo Science & Technology Innovation 2035 Key R&D Project. As principal contributor, he has received several awards, including the 2023 China Invention Association Invention Entrepreneurship Award Innovation Prize (First Class), the 2025, 2024, and 2022 Huawei "Challenge Benchmarking" Spark Value Award, the 2023 Lu Zengyong CAD&CG High-Tech Award (First Class), the 2021 Huawei Outstanding Technical Cooperation Achievement Award, the 2021 China Industry-University-Research Collaboration Innovation and Promotion Award (Individual Award), and the 2021 China Society of Image and Graphics (CSIG) Natural Science Award (Second Class). As a team member, he has also contributed to award-winning projects: the 2022 Ministry of Education Science and Technology Progress Award (First Class; Rank 2), the 2021 China Electronics Society Science and Technology Progress Award (First Class; Rank 4), the 2013 China Patent Excellence Award (Rank 5), and the 2012 Beijing Municipal Science and Technology Award (First Class; Rank 9). He has also been honored with four Best Paper Awards. Under his supervision, his students have received the CSIG Doctoral Dissertation Incentive Plan Award and the Zhejiang Provincial Outstanding Master's Thesis Award.

Speech Title: Multimodal Representation-Based World Model Generation for Images and Videos

Abstract: Image and video generation is currently a prominent yet challenging frontier in AI, particularly the underlying modeling of implicit, interactive world models. This talk analyzes the field from multiple perspectives, centered on data-driven AI learning methods for efficient multimodal generation, comprehension, and representation. It systematically reviews the stages of development in multimodal feature representation and learning, and introduces a series of representative research projects and applications from recent years that address visual semantic analysis, understanding, and generation through feature learning. Special attention is given to how these technologies could be used to build real-time, interactive world simulators driven by video generation. World simulators aim to emulate how the real world evolves by generating dynamic video sequences that obey physical laws, providing a foundation for decision-making, simulation, and content creation. This places core requirements on generative models regarding **efficiency, controllability, temporal consistency, and physical plausibility**. The talk concludes by discussing several open challenges in multimodal visual generation and understanding.

Ye Shi

ShanghaiTech University, Researcher

Biography:

Dr. Ye Shi is currently an Assistant Professor, Researcher, and PhD Supervisor at the School of Information Science and Technology, ShanghaiTech University, and the Director of the YesAI Trustworthy and General Intelligence Laboratory. In recent years, he has published over 70 papers in top conferences and journals (NeurIPS, ICML, ICLR, CVPR, ICCV, TNNLS, TSG, etc.). His research focuses on controllable, robust, and secure artificial intelligence theories, algorithms, and applications, with a systematic exploration of the theoretical foundations of controllable diffusion models and their applications in embodied intelligence. Dr. Ye Shi serves as Area Chair for NeurIPS 2025 and organizes the Human-Computer Interaction and Collaboration Workshop at ICCV 2025. He has been selected for the Shanghai Overseas Leading Talent Program and the Shanghai Yangfan Program, and has secured a National Natural Science Foundation grant. He has received the National Outstanding Overseas Student Award, the Outstanding Paper Award at the Generative Theory Workshop at ICLR 2025, and the Best Paper Award at IEEE ICCSCE 2016.

Speech Title: Reconstructing Embodied Intelligence Theory and Algorithm Systems Based on Diffusion Models

Abstract: This talk summarizes the team’s research on diffusion-model-driven embodied intelligence. On the theoretical side, it presents two main contributions: DSG introduces spherical Gaussian constraints for loss-guided diffusion, backed by an error lower-bound analysis, and enables training-free acceleration under manifold constraints; the UniDB framework unifies diffusion bridge methods through stochastic optimal control, revealing a general formulation of which existing methods are special cases. At the algorithm level, two reinforcement learning engines are developed: QVPO unifies exploration and exploitation in an off-policy reinforcement learning framework, and GenPO establishes the first diffusion-driven on-policy reinforcement learning paradigm. For validation, the methods demonstrate cross-domain generalization: AffordDP combines visual models with point cloud registration to generalize across object categories, and DreamPolicy achieves zero-shot generalization for humanoid robots on complex terrain through terrain-aware diffusion. Together, these results form a complete technical chain from generative modeling to decision-making and control, providing theoretically grounded, practical, and efficient solutions for embodied intelligence.
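The spherical Gaussian constraint mentioned for DSG can be illustrated with a short sketch. It relies on a standard fact: a sample from an n-dimensional isotropic Gaussian with standard deviation σ lies, with high probability, close to a sphere of radius √n·σ around its mean, so a guided step can move toward lower loss while staying on that sphere. The NumPy code below is a simplified illustration under our own assumptions, not the team's DSG implementation: the function name `spherical_guided_step`, the `guidance_rate` mixing weight, and the premise that the caller already has the denoising mean `mu`, step size `sigma`, and loss gradient `grad` are all hypothetical.

```python
import numpy as np


def spherical_guided_step(mu, sigma, grad, guidance_rate=0.1, rng=None):
    """Hypothetical sketch of one guided reverse-diffusion step on a sphere.

    mu:            mean of the unguided denoising step (any shape)
    sigma:         standard deviation of that step (scalar)
    grad:          gradient of the guidance loss w.r.t. the sample (same shape as mu)
    guidance_rate: assumed weight in [0, 1] blending random and guided directions
    """
    rng = np.random.default_rng() if rng is None else rng
    n = mu.size
    # High-dimensional Gaussian samples concentrate near this radius.
    radius = np.sqrt(n) * sigma

    # Unit direction of an ordinary stochastic step.
    d_sample = rng.standard_normal(mu.shape)
    d_sample /= np.linalg.norm(d_sample)

    # Unit steepest-descent direction for the guidance loss.
    d_guide = -grad / (np.linalg.norm(grad) + 1e-12)

    # Blend the two directions, renormalize, and place the sample on the sphere.
    d = d_sample + guidance_rate * (d_guide - d_sample)
    d /= np.linalg.norm(d)
    return mu + radius * d
```

Setting `guidance_rate` to 0 gives an ordinary (renormalized) stochastic step, while 1 moves entirely along the negative gradient; in both cases the new sample stays at distance √n·σ from `mu`, which is what the constraint is meant to guarantee.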

Jingya Wang

ShanghaiTech University, Researcher

Biography:

Dr. Jingya Wang is currently a Researcher, Assistant Professor, and PhD Supervisor at the School of Information Science and Technology, ShanghaiTech University. Her research interests focus on human-centered 3D interaction and embodied intelligence. She has published over 50 papers in top-tier conferences and journals in computer vision, including over 40 papers in CCF-A journals. She has served as Area Chair for conferences such as CVPR, NeurIPS, ICML, ICCV, ECCV, and ACM MM. She has been selected for the Shanghai Overseas Leading Talent Program and the Shanghai Yangfan Program, and has led projects funded by the National Natural Science Foundation of China. She received the 2018 CVPR Doctoral Consortium Award, and her first-authored paper was selected as one of the “Best of CVPR Papers” by Computer Vision News Magazine in 2018. In 2023, she was included in Baidu's AI Chinese Women Young Scholars List. She received Best Paper Award nominations at the 2024 ACM Design Automation Conference and the 2024 ACM Multimedia Conference.

Speech Title: Interaction and Embodied Intelligence for Spatial Intelligence

Abstract: A major challenge in embodied intelligence research is the scarcity of data and the high cost of collecting it in the real world. A key question is therefore how to make effective use of vast amounts of online data and prior knowledge of real-world human interactions to guide robot learning. In this talk, we will examine how to extract high-precision 3D motion and interaction information in open environments to make full-body motion control in humanoid robots more robust. We will also share our findings on 3D interaction extraction, 3D interaction reasoning, and 3D interaction simulation, and their applications to intelligent decision-making. Finally, we will explore how learning from large amounts of human interaction priors can improve the generalization of embodied agents in the open world, so that they perform better in new environments and with new objects.

Li Jiang

The Chinese University of Hong Kong, Shenzhen, Assistant Professor

Biography:

Li Jiang is an Assistant Professor in the School of Data Science and a Presidential Young Scholar at The Chinese University of Hong Kong, Shenzhen. She received her Ph.D. from The Chinese University of Hong Kong in 2021 and subsequently worked as a postdoctoral researcher at the Max Planck Institute. Her research focuses on computer vision and artificial intelligence, specifically 3D scene understanding, autonomous driving, spatial intelligence, world models, representation learning, and multimodal learning. Her work has been published in top-tier conferences and journals including CVPR, ICCV, ECCV, NeurIPS, TPAMI, and IJCV, with several papers selected as orals and highlights. Her Google Scholar citation count exceeds 12,000. Her research on motion prediction for autonomous driving won first place in the CVPR Waymo Open Dataset Motion Prediction Challenge for three consecutive years (2022-2024). She was named to the 2024 World's Top 2% Scientists list (single-year impact) compiled by Stanford University and Elsevier, as well as the "Top 50 Global Female AI Talent List" jointly released by UNIDO-ITPO (Beijing) and DONGBI DATA. She has also received funding from national-level young talent programs.

Speech Title: World Models Based on Omni Scene Modeling for Autonomous Driving

Abstract: As autonomous driving systems increasingly demand generalization, comprehension, and prediction capabilities, building a world model with universal world knowledge has become critically important. This presentation will introduce our recently proposed self-supervised general world model, DriveX, designed to construct generalized and predictive world knowledge representations for autonomous driving systems. The core of DriveX is the Omni Scene Modeling (OSM) module, which unifies geometric structures, semantic information, and visual details into a Bird's-Eye View (BEV) latent space through self-supervision, forming a unified and spatially aware world representation. To enhance modeling quality and transferability, DriveX employs a decoupled learning strategy that effectively separates representation learning from future state modeling, improving dynamic scene understanding. Additionally, the proposed future spatial attention mechanism dynamically aggregates the world model’s future predictions and adapts them to various downstream tasks, such as planning and prediction, in a unified paradigm. In the future, we plan to further explore the role of world models in autonomous driving data generation and closed-loop simulation, advancing their potential in building controllable, safe, and efficient intelligent driving systems.
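To make the idea of aggregating future predictions concrete, the sketch below lets each Bird's-Eye View (BEV) cell attend over the world model's predicted features for that same cell at T future steps, using scaled dot-product attention with a softmax over time. This is a hypothetical, simplified illustration and not the DriveX future spatial attention module: the function name, the (T, H, W, C) tensor layout, and the omission of learned query/key/value projections and spatial neighbourhoods are all our assumptions.

```python
import numpy as np


def future_spatial_attention(query, future_feats):
    """Hypothetical per-cell attention over predicted future BEV features.

    query:        current-frame BEV features, shape (H, W, C)
    future_feats: world-model predictions, shape (T, H, W, C)
    returns:      aggregated features, shape (H, W, C)
    """
    c = query.shape[-1]
    # Scaled dot-product score between each cell's query and that cell at each future step.
    scores = np.einsum("hwc,thwc->hwt", query, future_feats) / np.sqrt(c)
    # Softmax over the T future steps (max subtracted for numerical stability).
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    # Weighted sum of the future features for every BEV cell.
    return np.einsum("hwt,thwc->hwc", weights, future_feats)
```

Because the weights for each cell sum to one, a downstream planning or prediction head would receive a convex combination of the future features, weighted toward the time steps most similar to the current query.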