- Frontiers of Machine Learning

- Multimodal Large Language Model and Generative AI

- Smart Earth Observation and Remote Sensing Analysis: From Perception to Interpretation

- 3D Imaging and Display

- Forum on Multimodal Sensing for Spatial Intelligence

- Brain-Computer Interface: Frontiers of Imaging, Graphics and Interaction

- Foundation Models for Embodied Intelligence

- Workshop on Machine Intelligence Frontiers: Advances in Multimodal Perception and Representation Learning

- Human-centered Visual Generation and Understanding

- Spatial Intelligence and World Model for the Autonomous Driving and Robotics

- Seminar on the Growth of Women Scientists

- Video and Image Security in the Era of Large Models Forum

- ICIG 2025 Competition Forum

- Visual Intelligence Session

Multimodal perception for spatial intelligence is a core pathway for breaking through current bottlenecks in AI cognition. By fusing multi-source data such as vision and depth into a three-dimensional "world model," it addresses technical pain points in cross-modal collaboration, including fundamental spatial perception issues such as data heterogeneity and physical rule modeling. This enhances the spatial reasoning capabilities of machine intelligence, supports technological iteration in key areas such as autonomous driving and embodied intelligence, and promotes the deep integration of cognitive science and AI. By integrating 3D environmental perception with multimodal data processing, spatial intelligence also helps advance the application of intelligent technologies in fields such as autonomous driving, manned spaceflight, and deep-sea exploration. As major global economies prioritize artificial intelligence, spatial intelligence has become a new strategic high ground in technological competition.

This Forum on Multimodal Perception for Spatial Intelligence aims to promote technical exchange on spatial multimodal perception collaboration, discuss future breakthrough directions, explore how spatial intelligence and multimodal perception can address industry pain points, drive innovation in the spatial intelligence field, and foster the field as a source of technology and an engine for application.

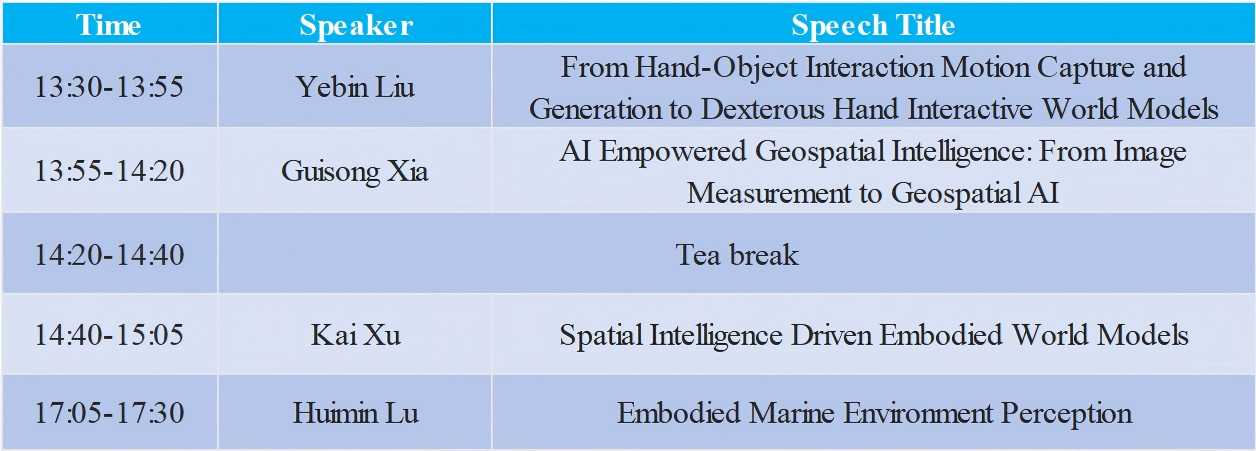

Schedule

Nov. 1st 13:30-15:30

Organizer

Qi Zhang

Tongji University, Professor

Biography:

Qi Zhang is a Tenured Associate Professor in the College of Computer Science and Technology at Tongji University. He received his Ph.D. in Computer Science and Technology from Beijing Institute of Technology and a Ph.D. in Analytics from the University of Technology Sydney. He has been selected for the National Overseas Young Talents Program, the Shanghai Pujiang Talent Program, and the Shanghai Magnolia Award for Overseas Young Talents. He has presided over and participated in multiple projects including the National Natural Science Foundation of China (NSFC) Young Scientists Fund, key R&D programs of the Ministry of Science and Technology, and NSFC projects. His main research interests include multimodal information processing, specifically brain vision encoding/decoding, time series analysis, and multimodal model compression. He has published over 60 papers in top international journals and conferences in artificial intelligence and data science, including TKDE, TNNLS, NeurIPS, ICML, ICLR, AAAI, IJCAI, ACM MM, and KDD. He serves as Deputy Secretary-General of the CSIG Young Scientists Committee.

Xiangbo Shu

Nanjing University of Science and Technology, Professor

Biography:

Xiangbo Shu is a Professor and Ph.D. Supervisor in the School of Computer Science and Engineering at Nanjing University of Science and Technology (NJUST), Deputy Director of the MIIT Key Laboratory of "Social Security Information Perception and Systems," and a recipient of the National Science Fund for Excellent Young Scholars and the Jiangsu Province Science Fund for Distinguished Young Scholars. His recent research focuses on computer vision, multimedia, and human behavior computing. He has published nearly 100 academic papers in international journals and conferences such as TPAMI, TIP, TNNLS, CVPR, ICCV, and ACM MM, including 8 ESI highly cited papers. He has received the Natural Science First Prize from the Chinese Electronics Society, the ACM MM 2015 Best Paper Nomination, the MMM 2016 Best Student Paper award, the Jiangsu Outstanding Doctoral Dissertation award, and the CAAI Outstanding Doctoral Dissertation award. He was listed in the Global Top 2% Scientists ranking from 2021 to 2024 and selected for the Jiangsu 333 High-Level Talent Training Project. He has presided over or participated in national projects including NSFC Key/General/Youth projects, key national R&D program topics, and national defense basic research projects. He serves as Deputy Secretary-General of the CSIG Young Scientists Committee and on the editorial boards of journals such as IEEE TNNLS, IEEE TCSVT, and Pattern Recognition.

Presenters

Yebin Liu

Tsinghua University, Professor

Biography:

Yebin Liu is a Tenured Professor in the Department of Automation at Tsinghua University and a recipient of the National Science Fund for Distinguished Young Scholars. His research focuses on 3D vision, 3D and 4D content generation, and digital humans. He has published over 100 papers in venues like TPAMI, SIGGRAPH, CVPR, and ICCV, with over 13,000 Google Scholar citations. Research outcomes have been licensed to 8 leading companies (e.g., Huawei, ByteDance, SenseTime). He serves on the editorial board of IEEE TVCG and has served as an area chair for CVPR, ICCV, and ECCV, and as a technical committee member for SIGGRAPH Asia. He is the Director of the CSIG 3D Vision Technical Committee. He received the 2012 National Technology Invention First Prize (Rank 3) and the 2019 Chinese Electronics Society Technology Invention First Prize (Rank 1).

Speech Title: From Hand-Object Interaction Motion Capture and Generation to Dexterous Hand Interactive World Models

Abstract: Dexterous hand interaction with and manipulation of objects are core challenges for digital humans and humanoid robots. Data and priors on how human hands interact with the environment and with objects in the real world form the foundation of world models for humanoid robot interaction. How to reconstruct and generate real-world human hand motions, and then build dexterous hand motion world models on top of multimodal large models, is a central issue in digital human and humanoid robot research. This talk centers on tasks such as motion capture and generation for hand-object manipulation, hand-object interaction video generation, and world interaction with mechanical dexterous hands. It analyzes the relationships among data sources for embodied intelligence (real robot data, simulation data, human data), introduces recent progress by the speaker and by the international community, and discusses future trends and opportunities.

Guisong Xia

Wuhan University, Professor

Biography:

Guisong Xia is a Second-Class Professor and Hongyi Distinguished Professor at Wuhan University, currently serving as Associate Dean of the School of Artificial Intelligence and Deputy Director of the National Engineering Research Center for Multimedia Software. He has long been engaged in research on computer vision, machine learning, intelligent unmanned systems, and intelligent processing of remote sensing information. He has presided over more than 20 government-funded research projects, including the NSFC Fund for Distinguished Young Scholars, Excellent Young Scholars, Joint Key Fund, Major Research Plan, and key R&D program topics. He has published over 150 papers in top-tier journals and conferences, and his research results have been applied in major national engineering projects and related operational scenarios. His honors include one Hubei Provincial Natural Science First Prize, three First Prizes for China's Surveying and Mapping Science and Technology Progress, the IEEE Geoscience and Remote Sensing Society (GRSS) Most Influential Paper Award, and the honor of Outstanding Doctoral Dissertation Supervisor from the China Society of Image and Graphics (CSIG). He also serves on the editorial boards of two SCI Zone 1 journals and is the Deputy Director of the Remote Sensing Image Committee of CSIG.

Speech Title: AI Empowered Geospatial Intelligence: From Image Measurement to Geospatial AI

Abstract: Image measurement plays a core role in tasks such as urban modeling, map updating, and 3D reconstruction. However, as remote sensing image acquisition methods diversify and application demands grow, the traditional paradigm that relies on rule design and limited samples can no longer meet the requirements for high-precision, high-throughput intelligent interpretation in complex geographic scenes. In recent years, artificial intelligence has increasingly empowered the geospatial field, giving rise to a new "AI4Geo" paradigm represented by large remote sensing models. This presentation focuses on the evolution from image measurement to intelligent interpretation, introducing our research progress in large model-driven remote sensing information extraction, structural expression, and semantic understanding, as well as practical applications in tasks such as building extraction from high-resolution remote sensing images.

Kai Xu

National University of Defense Technology, Professor

Biography:

Kai Xu is a Professor at the National University of Defense Technology (NUDT) and was a Visiting Scholar at Princeton University. His research focuses on computer graphics, 3D vision, embodied intelligence, digital twins, and related fields. He was among the early pioneers internationally in data-driven 3D perception, modeling, and interaction, proposing a theoretical and methodological system for structured 3D perception, modeling, and interaction in complex scenes, which has been scaled and applied in areas such as intelligent manufacturing. He has presided over research projects including the Class A (for Distinguished Young Scholars) and Class B (for Excellent Young Scholars) funds under the NSFC Young Scientists Fund, as well as Key Programs of the NSFC. He has published over 100 A-category papers in journals such as TOG, TPAMI, TVCG, and TIP. He has been recognized on the global list of Top 2% Most Cited Scientists. He serves on the editorial boards of premier international graphics journals including ACM Transactions on Graphics and IEEE Transactions on Visualization and Computer Graphics, and is an Executive Area Editor for Computational Visual Media. He has served as General Chair and Program Chair for major conferences in his field. He holds the position of Deputy Director of the Intelligent Graphics Technical Committee of the China Society of Image and Graphics (CSIG) and Deputy Director of the Geometric Design and Computing Technical Committee of the China Society for Industrial and Applied Mathematics (CSIAM). His awards include two Hunan Provincial Natural Science First Prizes (Rank 1 and 3), two Natural Science First Prizes from the China Computer Federation (CCF) (Rank 1 and 3), the Army Science and Technology Progress Second Prize, the Army Teaching Achievement Second Prize, and the Young Scientist Award from the Chinese Institute of Electronics (CIE).

Speech Title: Spatial Intelligence Driven Embodied World Models

Abstract: Training embodied intelligent systems in real physical environments is expensive. The mainstream approach relies on simulation-based learning and Sim2Real transfer. However, building simulation platforms with high fidelity and strong generalization is challenging. Although generative AI has provided a new paradigm for world model learning, "world foundational models" based on pixel-level generation require vast computational power and data. Breakthroughs in spatial intelligence may open a different technological path. By leveraging multimodal pre-trained large models, we aim to construct world models that integrate structured representations (such as dynamic scene graphs) and mechanistic constraints (such as physics-based dynamics) for general scene analysis and long-term dynamic prediction. This approach significantly improves the interpretability and real-to-virtual transferability of embodied intelligence. This talk will report on our work on building and learning structured and mechanistic world models based on spatial intelligence, as well as efficient embodied manipulation based on these world models.

Huimin Lu

Southeast University, Professor

Biography:

Huimin Lu is a Chief Professor at Southeast University and Executive Dean of the Ocean Institute of Advanced Studies, and a recipient of the National High-Level Talent Program. She is a highly cited scholar in the field of security from Coreweave and the winner of the 2024 Japan Research Frontier Award. She has published more than 100 academic papers, including over 40 CCF/CAA A-level papers. Her research has been cited over 6,000 times in SCI, and she has authored more than 30 ESI highly cited or hot papers. She holds two Japanese patents, one PCT patent, and four Chinese patents. Additionally, she has received more than 20 honors, including the IEEE Fukuoka Branch Excellent Student Research Award, the IEEE CAS Society Best Paper Award, the IEEE CIS Outstanding Paper Award, the International Contribution Award from the Japan Ministry of Land, Infrastructure, Transport and Tourism, and the National Outstanding Self-financed Overseas Student Award. Professor Lu serves as the Editor-in-Chief of Cognitive Robotics and is an editorial board member for Applied Soft Computing, Wireless Networks, IEEE/CAA Journal of Automatica Sinica, and Pattern Recognition. She is also a Senior Member of IEEE and the Chair of the IEEE Computer Society Big Data Technical Committee. Her research areas include ocean observation, robotics, artificial intelligence, and the Internet of Things.

Speech Title: Embodied Marine Environment Perception

Abstract: Environmental perception technology enables robots to accurately sense changes in their surroundings through modalities such as vision, hearing, and touch. With these modes of perception, robots can gather detailed information about their surroundings, providing the basis for subsequent decisions and actions. This talk will introduce research progress on embodied environmental perception technologies in marine robotics and discuss the challenges in practical engineering applications.