- Frontiers of Machine Learning

- Multimodal Large Language Model and Generative AI

- Smart Earth Observation and Remote Sensing Analysis: From Perception to Interpretation

- 3D Imaging and Display

- Forum on Multimodal Sensing for Spatial Intelligence

- Brain-Computer Interface: Frontiers of Imaging, Graphics and Interaction

- Foundation Models for Embodied Intelligence

- Workshop on Machine Intelligence Frontiers: Advances in Multimodal Perception and Representation Learning

- Human-centered Visual Generation and Understanding

- Spatial Intelligence and World Model for the Autonomous Driving and Robotics

- Seminar on the Growth of Women Scientists

- Video and Image Security in the Era of Large Models Forum

- ICIG 2025 Competition Forum

- Visual Intelligence Session

With the continuous evolution of deep learning technologies, artificial intelligence is rapidly advancing from single-modal understanding to multimodal integration. Multimodal AI, capable of synergizing diverse information sources such as vision, language, audio, and touch, not only enhances perceptual and reasoning abilities in complex environments but is also regarded as a critical pathway toward next-generation AI. Humans inherently possess multimodal learning capabilities, enabling holistic understanding even with incomplete information. Consequently, equipping machines with similar abilities has become a major focus for both academia and industry.

On another front, advances in 3D visual perception, closely integrated with multimodal fusion, are playing a central role in numerous fields including robotic navigation, human-computer interaction, augmented reality, digital twins, and smart manufacturing. By integrating various data sources such as visual, depth, infrared, and event-based information, multimodal 3D perception systems significantly improve an agent’s ability to interpret and interact with complex environments.

Machine Intelligence Research (MIR), a high-impact international journal led by Professor Tan Tieniu and sponsored by the Institute of Automation, Chinese Academy of Sciences, has long been committed to promoting cutting-edge exchanges and disseminating research outcomes in machine intelligence. Building on MIR’s established academic influence and brand reputation, this forum integrates two major frontier directions—“multimodal representation learning” and “3D visual perception”—to actively respond to current research trends while providing a valuable platform for interdisciplinary collaboration.

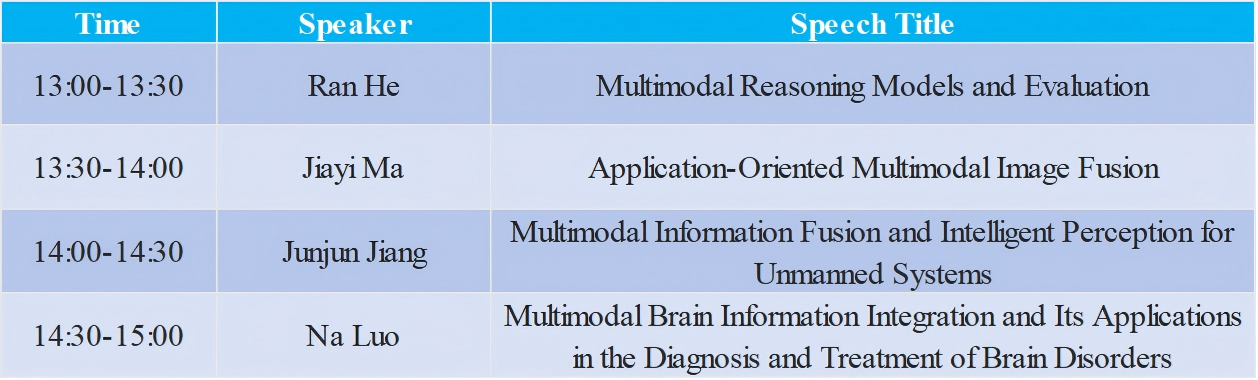

Schedule

Nov. 2nd 13:00~15:00

Organizer

Hui Zhang

Hunan University, Professor

Biography:

Hui Zhang (b. 1983) is a Full Professor and doctoral supervisor at Hunan University, where he serves as Dean of the School of Artificial Intelligence and Robotics and Dean of the School of Future Technology. He is also Deputy Director of the National Engineering Research Center for Visual Perception and Control Technology. He has been selected for the National High-Level Talent Program and serves on the Expert Group on Intelligent Robotics for China’s Ministry of Science and Technology. His research focuses on robotic vision, image recognition, and intelligent manufacturing. He has led over 20 national projects, published more than 60 papers, and holds over 50 invention patents. His work has received major awards including the Hunan Provincial Science and Technology Progress Award (First Prize, 2022–2023), the National Technological Invention Award (Second Prize, 2018), and the National Teaching Achievement Award (Second Prize, 2022).

Deng-Ping Fan

Nankai University, Professor

Biography:

Deng-Ping Fan is a Full Professor and deputy director of the Media Computing Lab (MCLab) at the College of Computer Science, Nankai University, China. Before that, he was a postdoctoral researcher working with Prof. Luc Van Gool at the Computer Vision Lab, ETH Zurich. From 2019 to 2021, he was a research scientist (PI) and team lead of IIAI-CV&Med at IIAI, working with Prof. Ling Shao and Prof. Jianbing Shen. He received his Ph.D. from Nankai University in 2019 under the supervision of Prof. Ming-Ming Cheng. His research interests are in Computer Vision, Machine Learning, and Medical Image Analysis. Specifically, he focuses on Dichotomous Image Segmentation (General Object Segmentation, Camouflaged Object Segmentation, Saliency Detection) and Multi-Modal AI. He is an outstanding member of CSIG and one of the core technical members of the TRACE-Zurich project on automated driving.

Presenters

Ran He

Institute of Automation, Chinese Academy of Sciences, Researcher

Biography:

Ran He is a Researcher at the National Key Laboratory of Multimodal Artificial Intelligence Systems, Institute of Automation, Chinese Academy of Sciences. He is an IAPR and IEEE Fellow, and currently serves as an Associate Editor-in-Chief of IEEE TIFS. His research interests include artificial intelligence, pattern recognition, and computer vision. He has undertaken projects such as the NSFC Excellent Young Scholars (ABC categories) and the Beijing Outstanding Young Scientist Fund. He has published 23 papers in leading journals such as IEEE TPAMI and IJCV, including 11 first-author papers with over 100 citations each. His research has been recognized with the First Prize of the CAAI Technical Invention Award, the First Prize of the CSIG Natural Science Award, and the Second Prize of the Beijing Science and Technology Progress Award. He has supervised students who received honors such as the IEEE SPS Best Young Author Award, ICPR Best Scientific Paper Award, and multiple Excellent Doctoral Dissertation Awards (Beijing, CAS, IEEE Biometrics Council). He has served as a Senior AE of IEEE TIP, and as an editorial board member for journals including IEEE TPAMI, TCSVT, TBIOM, IJCV, PR, TMLR, and Acta Automatica Sinica, earning four Best AE/Editor Awards. He has also served as Area Chair for major conferences including NeurIPS, ICML, ICCV, CVPR, ECCV, ICLR, AAAI, and IJCAI.

Speech Title: Multimodal Reasoning Models and Evaluation

Abstract: In recent years, large multimodal foundation models, represented by GPT-4o, have become a new research frontier. In particular, leveraging large language models for multimodal perception and reasoning has demonstrated emerging capabilities that highlight the potential path toward artificial general intelligence. This talk will trace the evolution from large language models and vision foundation models to large multimodal foundation models. It will provide a comprehensive overview of key aspects, including data, evaluation, architectures, training, and applications, while also discussing current challenges and future research directions.

Jiayi Ma

Wuhan University, Professor

Biography:

Jiayi Ma is a Professor and Ph.D. supervisor at the School of Electronic Information, Wuhan University. His research interests include computer vision and information fusion. He has published over 200 papers in top-tier journals and conferences such as CVPR, ICCV, IEEE TPAMI, IJCV, and Cell. He received the Qian Xuesen Best Paper Award and the Information Fusion Best Paper Award. His work has been cited over 40,000 times on Google Scholar with an H-index of 92. He received the First Prize of the Hubei Provincial Natural Science Award (1st contributor) and currently serves as Associate Editor for Information Fusion, IEEE TIP, and IEEE/CAA JAS.

Speech Title: Application-Oriented Multimodal Image Fusion

Abstract: Image fusion aims to integrate complementary information from multiple source images into a single composite image that provides a comprehensive representation of the scene, thereby significantly enhancing target recognition, scene perception, and environmental understanding. This talk focuses on application-oriented multimodal image fusion, analyzing the current challenges and opportunities in the field and systematically introducing several representative approaches. The discussion will highlight key directions, including unregistered multimodal image fusion, task-cooperative fusion across low- and high-level vision, and fusion methods with robustness to degradation and controllability via textual guidance. Typical application scenarios will also be presented to demonstrate the practical value and broad prospects of application-oriented image fusion.

Junjun Jiang

Harbin Institute of Technology, Professor

Biography:

Junjun Jiang is a Tenured Professor and Ph.D. supervisor at the School of Computer Science, Harbin Institute of Technology, and currently serves as Associate Dean of the School of Artificial Intelligence. He was selected into the National Youth Talent Program. He received his Ph.D. degree from the School of Computer Science, Wuhan University, in December 2014, and worked as a Specially Appointed Researcher at the National Institute of Informatics, Japan, from 2016 to 2018. His research interests mainly include image processing, computer vision, and deep learning. He has published over 100 papers in IEEE Transactions journals and CCF-A conferences, with more than 22,000 citations on Google Scholar and an H-index of 67. He has been recognized as a Highly Cited Researcher and ranked among the top 0.05% of scientists worldwide. He serves as an Editorial Board Member of Information Fusion (Best Editor Award, 2024), and as a Youth Editorial Board Member of Fundamental Research and IEEE/CAA JAS. He is a recipient of the Wu Wenjun AI Outstanding Youth Award and the CCF Excellent Doctoral Dissertation Award. He has led projects funded by the National Key R&D Program of China and the National Natural Science Foundation of China (key, general, and youth programs).

Speech Title: Multimodal Information Fusion and Intelligent Perception for Unmanned Systems

Abstract: In recent years, with the rapid rise and development of deep learning, supervised learning based on large-scale labeled datasets has achieved breakthrough performance in specific computer vision tasks under closed scenarios. However, the performance of such methods is gradually saturating, and their further advancement faces challenges posed by real-world open environments. To address the critical need for multimodal fusion and perception in unmanned platforms operating in complex field environments, our research focuses on fundamental issues such as few-shot learning, weak supervision, multi-source heterogeneity, and cross-domain adaptation. These efforts aim to provide technological support for intelligent perception and autonomous decision-making of unmanned platforms in challenging environments. This talk will highlight our recent research progress on multimodal information fusion and perception in open-world scenarios.

Na Luo

Institute of Automation, Chinese Academy of Sciences, Assistant Professor

Biography:

Na Luo, Ph.D., is an Associate Professor at the Institute of Automation, Chinese Academy of Sciences, a Master's Supervisor, and Deputy Director of the Beijing Key Laboratory of Brainnetome and Brain-Computer Interface. She also serves as an Adjunct Professor at Taiyuan University of Technology. Her research primarily focuses on multimodal fusion and its application to precision diagnosis and treatment of psychiatric disorders. She has led multiple research projects, including those funded by the National Natural Science Foundation of China, sub-tasks of the National Key R&D Program, and the Postdoctoral Science Foundation. Dr. Luo has published over 30 high-impact papers in prestigious journals, including Trends in Cognitive Sciences, eBioMedicine, and the British Journal of Psychiatry. Additionally, she serves as a Committee Member of the Brain Atlas Committee of the China Society of Image and Graphics (CSIG) and Secretary-General of the Brain Science and Artificial Intelligence Committee of the Chinese Association for Artificial Intelligence (CAAI).

Speech Title: Multimodal Brain Information Integration and Its Applications in the Diagnosis and Treatment of Brain Disorders

Abstract: Early diagnosis and precise intervention for psychiatric disorders have consistently been among the most challenging and critical areas of clinical research. Multimodal fusion methods, capable of integrating cross-modal, multi-source heterogeneous information (such as brain structure, brain function, and genetics), provide a vital perspective for intelligent diagnosis and treatment of psychiatric disorders. This presentation will first summarize commonly used multimodal fusion methods and how multimodal information can be integrated to construct a digital twin brain. It will then explore the applications of multimodal fusion methods in identifying diagnostic biomarkers for psychiatric disorders and determining neuromodulation therapy targets.