- Frontiers of Machine Learning

- Multimodal Large Language Model and Generative AI

- Smart Earth Observation and Remote Sensing Analysis: From Perception to Interpretation

- 3D Imaging and Display

- Forum on Multimodal Sensing for Spatial Intelligence

- Brain-Computer Interface: Frontiers of Imaging, Graphics and Interaction

- Foundation Models for Embodied Intelligence

- Workshop on Machine Intelligence Frontiers: Advances in Multimodal Perception and Representation Learning

- Human-centered Visual Generation and Understanding

- Spatial Intelligence and World Model for the Autonomous Driving and Robotics

- Seminar on the Growth of Women Scientists

- Video and Image Security in the Era of Large Models Forum

- ICIG 2025 Competition Forum

- Visual Intelligence Session

Large generative models have recently gained significant attention for their strong reasoning and generalization capabilities, which shed light on the path toward artificial general intelligence. Typical large-scale generative AI models include large language models (e.g., ChatGPT, DeepSeek) and large vision models (e.g., SAM). The success of large AI models has motivated researchers in the multimedia community to explore large multimodal models for multi-modality understanding (e.g., CLIP), since real-world data often comes from multiple modalities, such as images and text, video and audio, or sensor data from multiple sources. Trained on large amounts of data with large model capacity, these generative AI models have demonstrated a powerful ability to tackle a range of multimedia tasks, especially scene understanding tasks such as dialogue, robot navigation, and image segmentation.

However, in real-world applications, current generative AI models face many challenges caused by dynamic visual scenes and visual content generation, such as multimodal data alignment, domain shift, noisy data and labels, and novel object and pattern discovery. For video scene understanding, how to incorporate temporal consistency and coherence into generative AI models is also a challenge. Moreover, visual content generation faces issues of physical constraints and diversity discovery. Therefore, it is meaningful to study multimodal generative AI for dynamic visual scenes and visual content generation.

Schedule

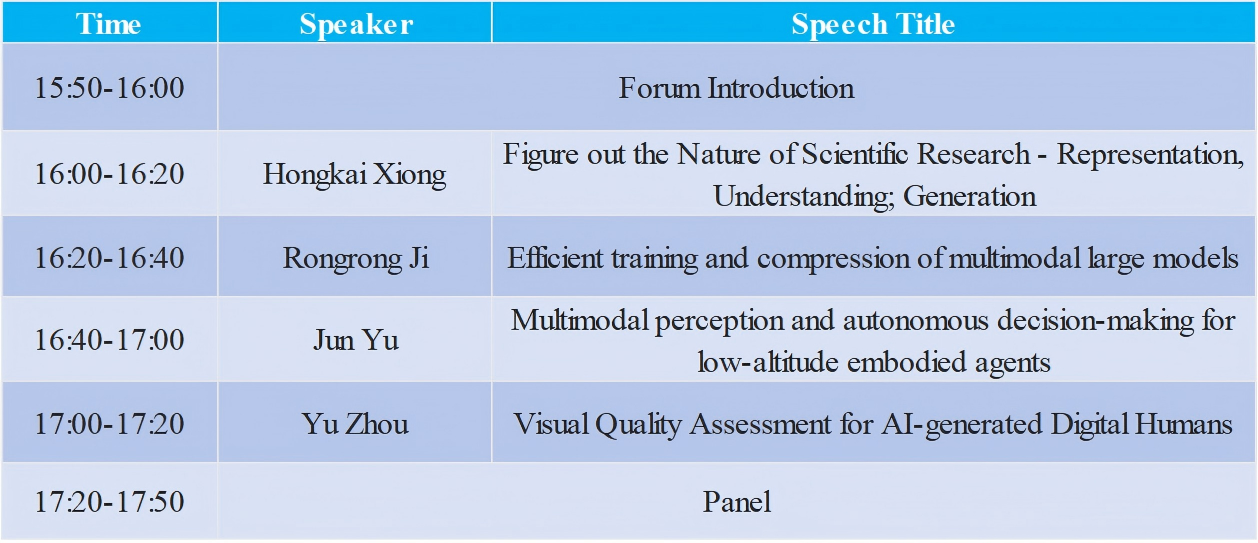

Oct. 31st 15:50-17:50

Organizer

Wei Wang

Beijing Jiaotong University, Professor

Biography:

Wei Wang is a Professor at the School of Computer Science and Technology, Beijing Jiaotong University, and a recipient of the National High-level Young Talents Program of China. His research interests include facial image and video generation and editing, as well as sequence model architectures. He has published over 40 papers in IEEE Transactions and top-tier CCF-A conferences and journals. He has served as an Area Chair for international conferences such as ICIP and ICMR. He has received several honors, including a Best Paper Nomination at ACM Multimedia, the ICCV Outstanding Doctoral Consortium Award, and the Best PhD Thesis Award from the Italian Association for Computer Vision, Pattern Recognition, and Machine Learning.

Mengshi Qi

Beijing University of Posts and Telecommunications, Professor

Biography:

Mengshi Qi is a Professor and doctoral supervisor at the School of Computer Science, Beijing University of Posts and Telecommunications. He received his Ph.D. from Beihang University and has visited the University of Rochester in the United States. He worked as a postdoctoral researcher at CVLAB, École Polytechnique Fédérale de Lausanne (EPFL), Switzerland, and as a visiting researcher at Baidu Research. He was selected for the 7th China Association for Science and Technology Youth Talent Support Program (Chinese Association for Artificial Intelligence) in 2021 and named a Xiaomi Young Scholar in 2024. His main research interests are artificial intelligence, computer vision, and multimedia intelligent computing. He has published more than 40 papers, including in top-tier CCF-A conferences (CVPR, ICCV, ECCV, NeurIPS, ACM MM, AAAI) and journals (TPAMI, TIP, TMM, TCSVT, TIFS). He has served as an Area Chair for AAAI, IJCAI, and ICME and as a guest editor for TMM.

Presenters

Hongkai Xiong

Shanghai Jiao Tong University, Professor

Biography:

Hongkai Xiong is a Cheung Kong Professor and a Distinguished Professor at both Shanghai Jiao Tong University (SJTU) and East China Normal University (ECNU). He is currently the Dean of the School of Communication and Electronic Engineering at ECNU. From 2018 to 2020, he served as the Vice Dean of Zhiyuan College at SJTU. From 2007 to 2008, he was a Research Scholar in the Department of Electrical and Computer Engineering, Carnegie Mellon University (CMU), Pittsburgh, PA, USA. From 2011 to 2012, he was a Scientist with the Division of Biomedical Informatics at the University of California, San Diego (UCSD), CA, USA. Dr. Xiong's research interests include information theory and coding, signal processing, multimedia communication and networking, and machine learning. He has published over 390 refereed journal and conference papers. In 2018, he received the Shanghai Youth Science and Technology Distinguished Accomplishment Award. In 2017, he received the Science and Technology Innovative Leader Talent Award. In 2014, he received the National Science Fund for Distinguished Young Scholars from the National Natural Science Foundation of China (NSFC). He co-authored papers that received the Top 1% Paper Award at the ACM International Conference on Multimedia (ACM Multimedia'22) and the Top 5% Award at the IEEE International Conference on Image Processing (IEEE ICIP'24), among others. He is a Fellow of the IEEE.

Speech Title: Figure out the Nature of Scientific Research - Representation, Understanding; Generation

Abstract: This talk explores the differences between thinking and intelligence, as well as technological development. Starting from the representation of information and predictive reasoning, it first describes the mathematical principles and technical ideas behind neural networks and large models. It then introduces the core technologies: generation, tokenizers, cluster communication, and understanding and decision-making. Finally, it summarizes the latest research and trends in intelligence and information science.

Rongrong Ji

Xiamen University, Professor

Biography:

Rongrong Ji is a Professor and doctoral supervisor at Xiamen University, where he currently serves as assistant to the president, director of the Science and Technology Department, executive director of the Institute of Artificial Intelligence, and director of the Key Laboratory of the Ministry of Education. He is a recipient of the National Science Fund for Distinguished Young Scholars and the Special Government Allowance of the State Council. He has long been engaged in research on cutting-edge artificial intelligence technologies. In recent years, he has published over a hundred full-length papers in top journals and conferences in the field, with nearly 30,000 citations on Google Scholar. He won the First Prize of Technological Invention of the Ministry of Education in 2016 and the First Prize of Science and Technology Progress of Fujian Province in 2018, 2020, and 2023. He also serves as co-chair of the National Standard Working Group for Artificial Intelligence and chair of the Expert Group for Artificial Intelligence of Fujian Province.

Speech Title: Efficient Training and Compression of Multimodal Large Models

Abstract: This talk introduces a series of innovative technologies for efficient compression and acceleration of large-scale pre-trained models. To address the challenges posed by limited computational resources in the practical deployment of multimodal large models, we propose a comprehensive technical solution spanning model adaptation, parameter compression, and inference acceleration. Key contributions include mixed-modality-based efficient vision-language instruction tuning (LaVIN); parameter and computation-efficient transfer learning (PCETL); a dynamic sparsification scheme without retraining; dynamic routing with expert architectures; affine transformation-based model quantization (AffineQuant); and distribution-fitting visual token pruning (FitPrune). These techniques significantly reduce computational overhead and storage requirements while maintaining model performance. They have been successfully deployed on various domestic computing platforms and mobile devices, providing critical technical support for the widespread application of large models.

Jun Yu

Harbin Institute of Technology (Shenzhen), Professor

Biography:

Jun Yu is a recipient of the National Science Fund for Distinguished Young Scholars and is currently the dean of the School of Intelligent Science and Engineering at Harbin Institute of Technology (Shenzhen), where he is a second-level professor and doctoral supervisor. He received his bachelor's and doctoral degrees from the College of Computer Science, Zhejiang University, and completed postdoctoral research at Nanyang Technological University, Singapore. He has been selected for talent programs including the National Science Fund for Distinguished Young Scholars and the Youth Yangtze River Program. He has led key projects of the National Natural Science Foundation of China, key research and development projects of the Ministry of Science and Technology, and provincial outstanding young talent projects. His research focuses on image processing and analysis and on multimodal content understanding. He has published more than 100 papers in IEEE/ACM Transactions and CCF Class A venues, holds over 30 granted national invention patents, and has more than 23,000 citations on Google Scholar. He has served as an editorial board member of IEEE TMM, TCSVT, Pattern Recognition, and other journals. As first author, he has won Best Paper awards from IEEE TMM, TIP, TCYB, and other journals, and he received the First Prize of the Provincial Natural Science Award in 2021 (ranked first). His research results have been deployed at People's Daily, Alibaba, and other organizations.

Speech Title: Multimodal perception and autonomous decision-making for low-altitude embodied agents

Abstract: In recent years, the rapid development of multimodal models has significantly improved the capabilities of embodied agents in perception and understanding, motion planning, and autonomous decision-making. As a typical application, low-altitude autonomous intelligent unmanned aerial vehicles (UAVs) have received extensive attention against the backdrop of the fast-growing low-altitude economy. However, their perception accuracy in complex environments and their autonomous navigation and control decision-making capabilities still face many challenges. To raise the intelligence level of such systems, academia and industry have been conducting in-depth research on key technologies such as multimodal perception and autonomous decision-making. This talk will systematically review the latest research progress in low-altitude embodied intelligence and explore future development trends and key technical paths.

Yu Zhou

China University of Mining and Technology, Associate Professor

Biography:

Yu Zhou, Ph.D., is an Associate Professor, Master's Supervisor, and Deputy Director of the Department of Electronic Information Engineering at China University of Mining and Technology, where he previously completed a postdoctoral fellowship. He has been named a "Smart Foundation" Outstanding Teacher by the Ministry of Education and Huawei, a Pioneer Teacher of Huawei Cloud and Computing, and an Outstanding Young Backbone Teacher of China University of Mining and Technology. His research is mainly in artificial intelligence and computer vision. He has published over 40 papers in renowned journals and conferences in the field and has applied for over 20 national invention patents. He has led more than 10 teaching and research projects, including National Natural Science Foundation projects, Jiangsu Provincial Natural Science Foundation projects, Collaborative Education and Industry Cooperation Projects of the Ministry of Education, and enterprise-commissioned projects. He guided students to first place in an NTIRE challenge at CVPR 2025, a top-tier CCF-A international conference. He is a Young Editor of the Zone 1 TOP journal "International Journal of Mining Science and Technology", a Young Editor of "Journal of Jiangsu University", and a member of IEEE, CCF, CSIG, and SPS.

Speech Title: Visual Quality Assessment for AI-generated Digital Humans

Abstract: With the rapid development of generative artificial intelligence and large model technologies, the visual quality of generated digital humans has become a key bottleneck restricting the development of virtual reality and digital entertainment. This talk will first systematically review the history of AI-generated content quality assessment. It will then explain the causes of quality degradation in AI-generated digital humans and the types and characteristics of their distortions, and introduce a series of results from our team, based on human visual characteristics, on generative talking heads and interactive digital humans. Finally, in light of future trends in generative artificial intelligence, it will discuss the challenges and research opportunities in digital human quality evaluation.